GraphRAG: Customizing Knowledge Graphs in Practice with PolarDB, the Tongyi Qianwen API, and LangChain

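Embedding module: provides a unified interface for embedding capabilities. Like the LLM module, it has implementations from different vendors, such as OpenAIEmbeddings and DashScopeEmbeddings. An implementation must likewise inherit from the Embeddings base class and implement its two methods, embed_documents and embed_query.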

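```python
from abc import ABC, abstractmethod
from typing import List


class Embeddings(ABC):
    """Interface for embedding models."""

    @abstractmethod
    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        """Embed search docs."""

    @abstractmethod
    def embed_query(self, text: str) -> List[float]:
        """Embed query text."""
```

VectorStore module: the vector storage module stores the vectors produced by the Embedding module together with the data they were generated from, and mainly serves the LLM as its memory and retrieval component. An example is the AnalyticDB VectorStore module. Implementing a VectorStore mainly involves implementing a few write and query interfaces.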

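```python
# Abridged excerpt of LangChain's VectorStore base class
from abc import ABC, abstractmethod
from typing import Any, Iterable, List, Optional

from langchain.schema import Document


class VectorStore(ABC):
    """Interface for vector store."""

    @abstractmethod
    def add_texts(
        self,
        texts: Iterable[str],
        metadatas: Optional[List[dict]] = None,
        **kwargs: Any,
    ) -> List[str]:
        """Embed the texts, store them with their metadata, and return their IDs."""

    def search(self, query: str, search_type: str, **kwargs: Any) -> List[Document]:
        """Return the documents most similar to the query for the given search type."""
```

Chain module: chains the modules above together so that calls are simpler and users do not have to manage a tedious call sequence. LangChain already integrates many chains; the most important is LLMChain, which internally defines and uses different PromptTemplates for different scenarios.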

Agents module: like chains, it provides a rich set of agent templates for implementing different agents. It is covered in detail later.

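There are also modules such as indexes and retrievers, which are variants of the modules above, plus callable utilities such as tools. We won't go into them here; the cases later in this article show how to use these modules to build your own applications.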
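To make the Embeddings and VectorStore contracts above concrete, here is a toy, dependency-free sketch: a hashing-trick embedder that exposes embed_documents and embed_query, and an in-memory store with add_texts and a brute-force similarity_search. The class names HashingEmbeddings and InMemoryVectorStore are hypothetical and deliberately do not subclass LangChain's base classes; a real integration would inherit from Embeddings/VectorStore and call an actual model such as DashScope's.

```python
import hashlib
import math
from typing import List, Optional, Tuple


class HashingEmbeddings:
    """Toy embedder exposing the same two methods as the Embeddings interface.

    Each lower-cased token is hashed into one of `dim` buckets (the "hashing
    trick"), and the count vector is scaled to unit length.
    """

    def __init__(self, dim: int = 256) -> None:
        self.dim = dim

    def embed_query(self, text: str) -> List[float]:
        vec = [0.0] * self.dim
        for token in text.lower().split():
            # sha256 is stable across runs, unlike Python's salted hash()
            digest = hashlib.sha256(token.encode("utf-8")).hexdigest()
            vec[int(digest, 16) % self.dim] += 1.0
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0
        return [v / norm for v in vec]

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self.embed_query(text) for text in texts]


class InMemoryVectorStore:
    """Toy vector store: keeps (text, metadata, vector) rows and scans them all."""

    def __init__(self, embedding: HashingEmbeddings) -> None:
        self.embedding = embedding
        self.rows: List[Tuple[str, dict, List[float]]] = []

    def add_texts(self, texts: List[str], metadatas: Optional[List[dict]] = None) -> List[str]:
        metadatas = metadatas or [{} for _ in texts]
        vectors = self.embedding.embed_documents(texts)
        start = len(self.rows)
        self.rows.extend(zip(texts, metadatas, vectors))
        # IDs here are simply row positions, returned as strings
        return [str(i) for i in range(start, len(self.rows))]

    def similarity_search(self, query: str, k: int = 4) -> List[str]:
        q = self.embedding.embed_query(query)
        # Vectors are unit length, so the dot product equals cosine similarity
        scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, _, vec in self.rows]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]
```

Hashed bag-of-words vectors only capture token overlap, not meaning, so a sketch like this is useful for wiring and testing a pipeline, not for judging retrieval quality.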

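ChatBots are a typical LLM application scenario, which can be further divided into Q&A assistants (knowledge bases), intelligent customer service, Copilots, and so on. A typical example is LangChain-chatchat. Building a ChatBot mainly requires the following modules: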

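TextSplitter: documents are often long, and because of the token limits of the LLM and the embedding model they cannot be passed to the LLM in full, so the documents to be stored are split by certain rules into small, cohesive chunks before storage.

LLM module: summarizes and answers questions.

Embedding module: produces vector representations of the knowledge and of the questions.

VectorStore module: stores the local knowledge and retrieves the matching content.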
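A TextSplitter can be as simple as fixed-size windows with overlap. The sketch below is a hypothetical split_text helper, not LangChain's implementation (LangChain splitters such as RecursiveCharacterTextSplitter first try to break on separators like blank lines and newlines); here chunk_size and chunk_overlap are measured in characters, not tokens.

```python
from typing import List


def split_text(text: str, chunk_size: int = 200, chunk_overlap: int = 20) -> List[str]:
    """Split text into fixed-size character windows that overlap.

    Each chunk holds at most `chunk_size` characters and repeats the last
    `chunk_overlap` characters of the previous chunk, so text near a
    boundary appears in both neighbouring chunks.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # stop once this window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap is the simplest guard against the "split too finely, meaning lost" problem discussed later; it does not help with tables or figures, which need structure-aware splitting.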

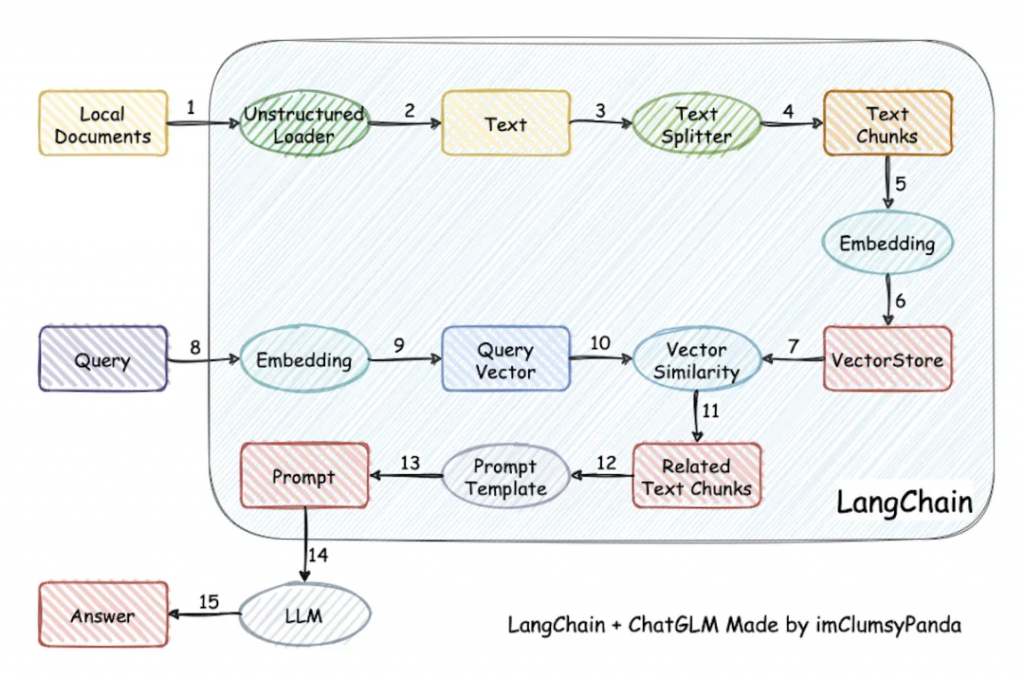

The figure above shows the call chain clearly (a classic diagram, borrowed from elsewhere).

First, we pull some Q&A data from Google, then call the embedding model on DashScope to vectorize it and write the vectors into AnalyticDB PostgreSQL.

```python
import json
import os

import wget
from langchain import VectorDBQA
from langchain.embeddings import DashScopeEmbeddings
from langchain.vectorstores import AnalyticDB

# Connection parameters come from environment variables, with local defaults
CONNECTION_STRING = AnalyticDB.connection_string_from_db_params(
    driver=os.environ.get("PG_DRIVER", "psycopg2cffi"),
    host=os.environ.get("PG_HOST", "localhost"),
    port=int(os.environ.get("PG_PORT", "5432")),
    database=os.environ.get("PG_DATABASE", "postgres"),
    user=os.environ.get("PG_USER", "postgres"),
    password=os.environ.get("PG_PASSWORD", "postgres"),
)

# All the examples come from https://ai.google.com/research/NaturalQuestions
# This is a sample of the training set that we download and extract for some
# further processing.
wget.download("https://storage.googleapis.com/dataset-natural-questions/questions.json")
wget.download("https://storage.googleapis.com/dataset-natural-questions/answers.json")

# Load the data
with open("questions.json", "r") as fp:
    questions = json.load(fp)

with open("answers.json", "r") as fp:
    answers = json.load(fp)

embeddings = DashScopeEmbeddings(
    model="text-embedding-v1", dashscope_api_key="your-dashscope-api-key"
)

# Embed every answer and write it to AnalyticDB; pre_delete_collection drops
# an existing collection with the same name first
doc_store = AnalyticDB.from_texts(
    texts=answers,
    embedding=embeddings,
    connection_string=CONNECTION_STRING,
    pre_delete_collection=True,
)
```

Next, create the Tongyi LLM that is integrated into LangChain.

```python
import os

from langchain.llms import Tongyi

os.environ["DASHSCOPE_API_KEY"] = "your-dashscope-api-key"
llm = Tongyi()
```

Then query and retrieve the data, and answer the questions.

```python
import random

from langchain.prompts import PromptTemplate

custom_prompt = """
Use the following pieces of context to answer the question at the end. Please provide
a short single-sentence summary answer only. If you don't know the answer or if it's
not present in given context, don't try to make up an answer, but suggest me a random
unrelated song title I could listen to.
Context: {context}
Question: {question}
Helpful Answer:
"""

custom_prompt_template = PromptTemplate(
    template=custom_prompt, input_variables=["context", "question"]
)

# chain_type="stuff" puts all retrieved documents into {context} of one prompt
custom_qa = VectorDBQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    vectorstore=doc_store,
    return_source_documents=False,
    chain_type_kwargs={"prompt": custom_prompt_template},
)

random.seed(41)
for question in random.choices(questions, k=5):
    print(">", question)
    print(custom_qa.run(question), end="\n\n")
```

```text
> what was uncle jesse's original last name on full house
Uncle Jesse's original last name on Full House was Cochran.

> when did the volcano erupt in indonesia 2018
No information about a volcano erupting in Indonesia in 2018 is present in the given context. Suggested song title: "Volcano" by U2.

> what does a dualist way of thinking mean
A dualist way of thinking means believing that humans possess a non-physical mind or soul which is distinct from their physical body.

> the first civil service commission in india was set up on the basis of recommendation of
The first Civil Service Commission in India was not set up on the basis of a recommendation.

> how old do you have to be to get a tattoo in utah
In Utah, you must be at least 18 years old to get a tattoo.
```

While actually helping users build one-stop ChatBots, we still ran into many problems: for example, text split too finely loses its meaning, and when a document contains charts or tables, the resulting paragraphs can no longer be understood after splitting.

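One of our optimizations is to prefix each chunk with the hierarchy of headings it sits under, so that the chunk keeps its context, for example:

#Title1-Section1-Subsection1#:Content1

#Title1-Section1-Subsection1#:Content2

#Title1-Section1-Subsection2#:Content1

#Title2-Section1-Subsection1#:Content1

Although we have made many optimizations, users' documents vary so widely that we still have not found a fully general solution for all data sources. For example, a splitter that performs well on markdown may degrade badly on PDF. Some users also want images, videos, and even PPT slides to be recalled along with the text. At present we only recall such related content through metadata links rather than vectorizing it directly. If you have a good approach, feel free to share it in the comments.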
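The heading-prefix chunk format above can be produced with a small markdown splitter. This is a sketch under assumptions: the article does not describe how its splitter works, so split_with_heading_path is hypothetical, it only understands ATX headings (# to ######), and it emits one chunk per paragraph.

```python
import re
from typing import List

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def split_with_heading_path(markdown: str) -> List[str]:
    """Emit one chunk per paragraph, prefixed with its heading path.

    Output format: "#H1-H2-H3#:paragraph text".
    """
    path: List[str] = []        # current heading titles, one entry per level
    chunks: List[str] = []
    paragraph: List[str] = []   # lines of the paragraph being collected

    def flush() -> None:
        if paragraph:
            body = " ".join(paragraph).strip()
            chunks.append(f"#{'-'.join(path)}#:{body}")
            paragraph.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # drop headings at this level or deeper, then push the new one
            del path[level - 1:]
            path.append(match.group(2).strip())
        elif line.strip():
            paragraph.append(line.strip())
        else:
            flush()  # a blank line ends the current paragraph
    flush()
    return chunks
```

For example, a "### Linux" paragraph under "# Guide" and "## Install" becomes "#Guide-Install-Linux#:..."; PDFs and documents without headings need another way to recover this structure, which is the generality problem described above.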

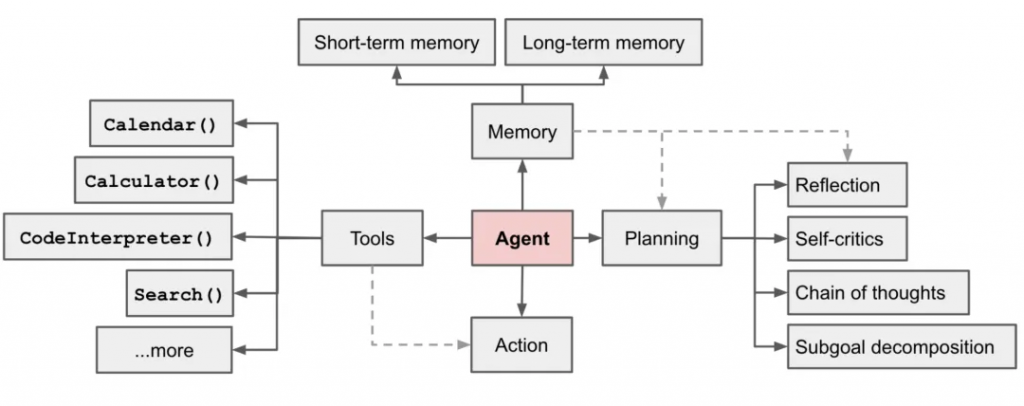

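Building AI agents on top of LLMs is another typical application of large language models. Very popular open-source projects such as AutoGPT and BabyAGI are typical examples. They show that the potential of LLMs goes beyond generating well-written text, stories, and articles: an LLM can be viewed as a powerful, self-directed decision-making system. Letting AI make decisions carries some risk, but in simple scenarios that merely involve tedious work, letting AI decide in place of people is reasonable.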

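In an autonomous agent system built around an LLM, the LLM is the agent's brain, and other components are needed to serve as its limbs. AI agents mainly draw on the ideas of chain of thought and tree of thoughts to improve their reasoning and decision-making.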

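Planning

Planning serves two purposes: task decomposition, which breaks a large task into smaller, manageable subgoals; and self-reflection, which criticizes and refines past actions so that later steps improve.

Memory

Short-term memory: all in-context learning (see prompt engineering) can be viewed as using the model's short-term memory to learn.

Long-term memory: gives the agent the ability to retain and recall (effectively unlimited) information over long periods, usually by using an external vector store with fast retrieval.

Tools

The Tools module lets the agent call external APIs to obtain extra information missing from the model weights (which are usually hard to change after pre-training), including real-time information, code execution, and access to proprietary information sources. This is usually done by designing APIs for the LLM to call.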

A complex task usually involves many steps. The agent needs to know what these steps are and plan ahead.

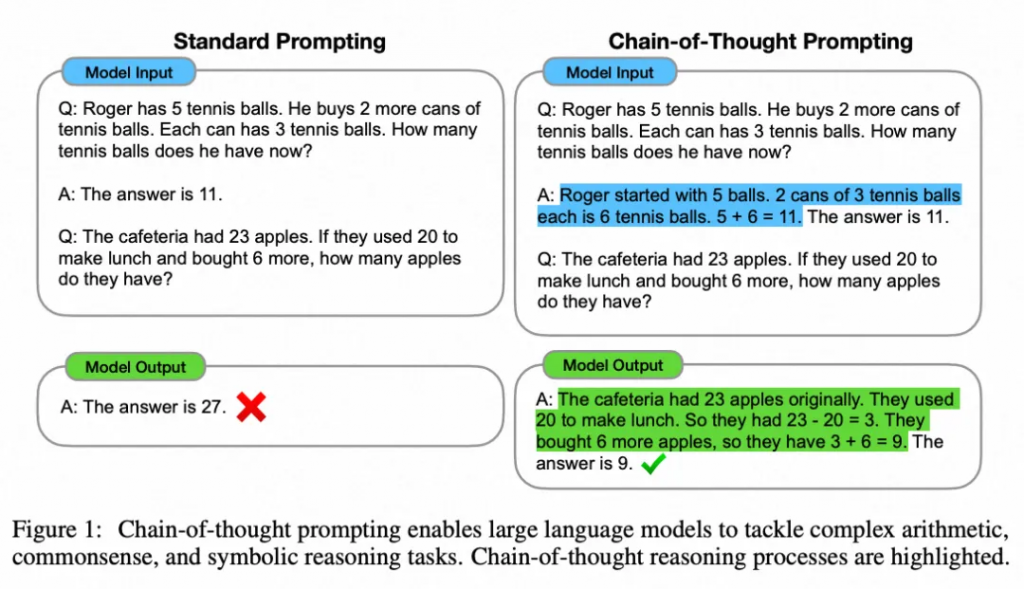

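Chain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for improving model performance on complex tasks. The model is instructed to "think step by step", spending more test-time computation to decompose hard tasks into smaller, simpler steps. CoT turns a big task into multiple manageable ones and gives an interpretation of the model's thinking process.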

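Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts at each step, forming a tree. The search can be breadth-first (BFS) or depth-first (DFS), and each state is evaluated by a classifier (via a prompt) or by majority vote.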
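The breadth-first variant of Tree of Thoughts can be sketched as a beam search over partial solutions. In the actual method both steps are LLM prompts; here propose (generate candidate next thoughts) and score (the evaluator) are plain functions passed in, majority voting is not shown, and the function name and beam parameter are illustrative.

```python
from typing import Callable, List, Tuple

State = Tuple[int, ...]  # a partial solution: the thoughts chosen so far


def tree_of_thoughts_bfs(
    root: State,
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    depth: int,
    beam: int,
) -> State:
    """Breadth-first ToT: expand every kept state, then keep the best `beam`."""
    frontier = [root]
    for _ in range(depth):
        # one BFS level: all children of all states on the frontier
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam]
    return max(frontier, key=score)
```

For example, with propose appending a digit from 1 to 9 and score = -abs(15 - sum(state)), a depth-2 search with beam=3 returns a pair of digits that sums to 15. A DFS variant would instead recurse into the best child and backtrack when the evaluator rates a state as hopeless.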

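Task decomposition can be done (1) by the LLM with simple prompts such as "Steps for task X.\n1." or "What are the subgoals for achieving task X?"; (2) with task-specific instructions, e.g. "Write an outline for the copy."; or (3) with interactive human input that specifies the steps.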
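Mode (1) can be sketched as a prompt builder plus a parser for the numbered list the model continues. The prompt wording mirrors the example above; decomposition_prompt and parse_steps are hypothetical helpers, and no model is called here.

```python
import re
from typing import List


def decomposition_prompt(task: str) -> str:
    # The trailing "1." nudges the model to continue with a numbered list
    return f"Steps for {task}.\n1."


def parse_steps(completion: str) -> List[str]:
    """Turn the model's numbered continuation back into a list of steps."""
    text = "1." + completion  # re-attach the "1." that ended the prompt
    steps: List[str] = []
    for line in text.splitlines():
        # accept "2. step" and "2) step"; ignore any other lines
        match = re.match(r"\s*\d+[.)]\s*(.+)", line)
        if match:
            steps.append(match.group(1).strip())
    return steps
```

Each parsed step can then become its own subtask for the agent.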

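Self-reflection is a very important idea: it lets an agent improve iteratively by refining past action decisions and correcting earlier mistakes. It plays a key role in real-world tasks where trial and error is acceptable, such as writing a script for a specific purpose.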
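The core self-reflection loop (try, evaluate, record what went wrong, try again with that record in context) can be sketched without any model. attempt and evaluate are hypothetical stand-ins: in practice attempt would prompt the LLM with the task plus the accumulated reflections, and evaluate might run the generated script or its tests.

```python
from typing import Callable, List, Optional, Tuple


def reflect_and_retry(
    attempt: Callable[[List[str]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    max_trials: int = 3,
) -> Tuple[Optional[str], List[str]]:
    """Run attempt -> evaluate -> record feedback, up to max_trials times.

    `attempt` receives every earlier reflection, which is how past mistakes
    inform the next try. Returns (accepted answer or None, reflections).
    """
    reflections: List[str] = []
    for _ in range(max_trials):
        candidate = attempt(reflections)
        ok, feedback = evaluate(candidate)
        if ok:
            return candidate, reflections
        reflections.append(f"Attempt {candidate!r} failed: {feedback}")
    return None, reflections
```

For example, if attempt first returns "x + 1" and then "x * 2", and evaluate checks that the expression equals 6 when x = 3, the loop records one failed attempt and returns "x * 2". Capping max_trials matters because each retry costs another model call.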

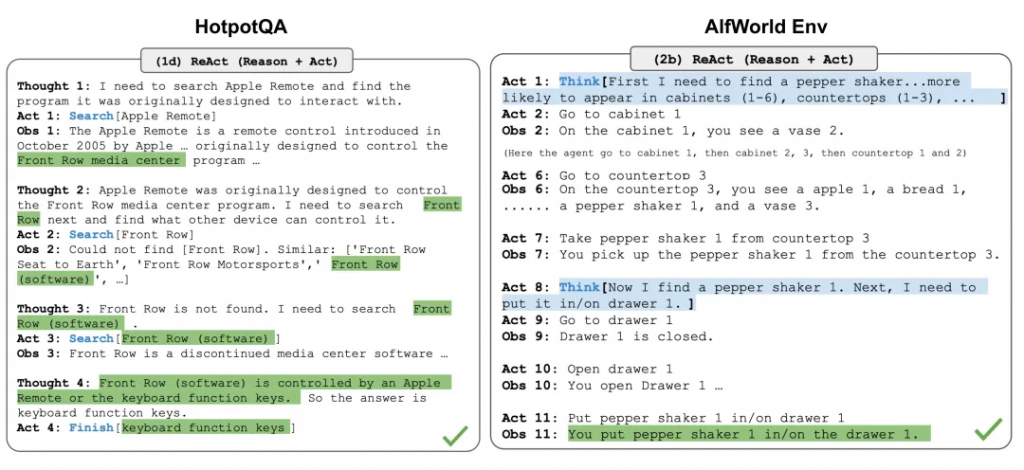

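ReAct (Yao et al. 2023) integrates reasoning and acting within the LLM by extending the action space to a combination of task-specific discrete actions and the language space. The former lets the LLM interact with the environment (e.g. use a search engine API), while the latter prompts the LLM to generate reasoning traces in natural language.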

ReAct's prompt template contains explicit steps for the LLM to think through, roughly in the following format:

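```text
Thought: ...
Action: ...
Observation: ...
... (Repeated many times)
```

In experiments on both knowledge-intensive tasks and decision-making tasks, ReAct answered better than an action-only baseline that omits the "Thought: …" step.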
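A minimal ReAct driver loop can be sketched as follows, assuming the model writes "Action: <tool>" and "Action Input: <text>" lines as in the LangChain SQL-agent trace later in this article (an assumption; the template above only names Thought/Action/Observation). llm is any callable from prompt text to completion and tools maps names to functions; both are hypothetical stand-ins.

```python
import re
from typing import Callable, Dict, Optional

ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")
FINAL_RE = re.compile(r"Final Answer:\s*(.+)", re.DOTALL)


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> Optional[str]:
    """Alternate model steps (Thought/Action) with tool Observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model writes a Thought plus an Action, or a Final Answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if not action:
            transcript += "Observation: could not parse an action\n"
            continue
        name, arg = action.group(1).strip(), action.group(2).strip()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    return None  # gave up after max_steps
```

Real implementations also pass a stop sequence such as "Observation:" to the model so that it does not invent observations; the observation text is always produced by the tool.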

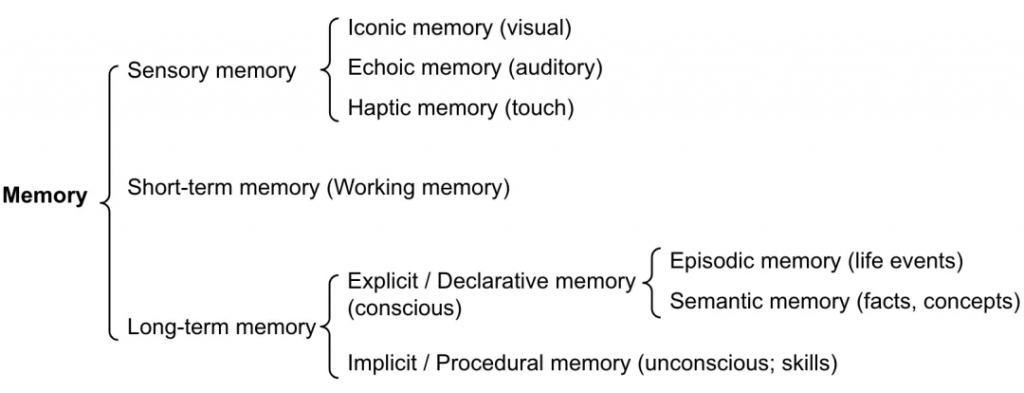

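Memory can be defined as the processes used to acquire, store, retain, and later retrieve information. The human brain has several types of memory.

Sensory memory: the earliest stage of memory, which lets us retain impressions of sensory information (visual, auditory, etc.) after the original stimulus has ended. Sensory memory typically lasts only a few seconds. Subcategories include iconic memory (visual), echoic memory (auditory), and haptic memory (touch).

Short-term memory (STM): stores the information we are currently aware of and that is needed to carry out complex cognitive tasks such as learning and reasoning. Its capacity is believed to be about 7 items (Miller 1956), and it lasts 20 to 30 seconds.

Long-term memory (LTM): can store information for a long time, from a few days to decades, with essentially unlimited capacity. It has two subtypes:

Explicit/declarative memory: memory of facts and events that can be consciously recalled, including episodic memory (events and experiences) and semantic memory (facts and concepts).

Implicit/procedural memory: unconscious memory of skills and routines that are performed automatically, such as riding a bike or typing on a keyboard.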

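We can roughly consider the following mapping:

Sensory memory corresponds to the raw inputs (text, images, or other modalities), which are embedded before being used as input.

Short-term memory is like the context, i.e. the chat history; it is short-lived and finite because it is bounded by the token limit.

Long-term memory is like an external vector store that the agent can consult at query time, accessed through fast retrieval.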
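The short-term-memory mapping implies trimming the chat history to fit the context window. A sketch, assuming token count is approximated by word count (a real system would count with the model's own tokenizer); fit_history is a hypothetical helper that keeps the most recent messages within the budget.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text)


def fit_history(history: List[Message], budget: int) -> List[Message]:
    """Keep the most recent messages whose combined size fits in `budget`.

    Size is approximated by whitespace-separated words.
    """
    kept: List[Message] = []
    used = 0
    # walk backwards from the newest message
    for role, text in reversed(history):
        cost = len(text.split())
        if used + cost > budget:
            break
        kept.append((role, text))
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Whatever falls out of the window is lost unless it was also written to long-term memory, which is why agents pair a window like this with a vector store.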

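External storage can ease the limits of a finite attention span. A standard practice is to save embedding representations of information in a vector database that supports fast maximum inner product search (MIPS). To speed up retrieval, a common choice is an approximate nearest neighbor (ANN) algorithm that returns approximately the top k nearest neighbors, trading a small loss in accuracy for a large speedup. Readers interested in similarity algorithms can read the article "The Vector Database Even ChatGPT Recommends: More Than Just a Vector Index".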
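For reference, exact MIPS is just a scan over every stored vector; ANN indexes exist to avoid exactly this O(n * d) cost. mips_top_k is a hypothetical brute-force baseline, handy for measuring an ANN index's recall on a small sample.

```python
import heapq
from typing import List, Sequence, Tuple


def mips_top_k(
    query: Sequence[float], vectors: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Exact maximum inner product search by brute force.

    Returns (index, inner product) pairs for the k highest-scoring vectors.
    """
    scores = ((i, sum(q * v for q, v in zip(query, vec))) for i, vec in enumerate(vectors))
    return heapq.nlargest(k, scores, key=lambda pair: pair[1])
```

When vectors are normalized to unit length, inner product and cosine similarity rank results identically, so the same baseline covers both.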

Tools let an LLM accomplish things it cannot do on its own.

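Modular Reasoning, Knowledge and Language (Karpas et al. 2022) proposes the MRKL system, which contains a set of expert modules, with a general-purpose LLM acting as a router that sends each query to the most suitable expert module. These modules can be other models (text-to-image, domain models, etc.) or functional modules (e.g. a math calculator, a currency converter, a weather API). The most typical approach today is ChatGPT's function calling: once we register our interfaces with ChatGPT and describe what they do, ChatGPT decides which interface to call and with which arguments, so that we can execute the call and return the correct answer.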
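The register, describe, and dispatch pattern behind MRKL routing and function calling can be sketched with a plain dictionary. Everything here is hypothetical: REGISTRY, register, tool_catalog, and dispatch are illustrative names, the exchange rate is passed in rather than fetched, and the model's output is assumed to be JSON of the form {"name": ..., "arguments": ...}. OpenAI-style function calls carry the arguments as a JSON-encoded string, so dispatch accepts either a dict or a string.

```python
import json
from typing import Any, Callable, Dict

# name -> {"description": str, "fn": callable}
REGISTRY: Dict[str, Dict[str, Any]] = {}


def register(name: str, description: str) -> Callable[[Callable], Callable]:
    """Decorator that records a function and the description shown to the LLM."""
    def wrap(fn: Callable) -> Callable:
        REGISTRY[name] = {"description": description, "fn": fn}
        return fn
    return wrap


@register("convert_currency", "Convert an amount of money using the given exchange rate.")
def convert_currency(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


@register("add", "Add two numbers.")
def add(a: float, b: float) -> float:
    return a + b


def tool_catalog() -> str:
    """The text a router LLM would see when choosing a module."""
    return "\n".join(f"{name}: {meta['description']}" for name, meta in REGISTRY.items())


def dispatch(call_json: str) -> Any:
    """Execute a call the model emitted as {"name": ..., "arguments": ...}."""
    call = json.loads(call_json)
    entry = REGISTRY.get(call["name"])
    if entry is None:
        raise KeyError(f"unknown tool: {call['name']}")
    args = call.get("arguments", {})
    if isinstance(args, str):
        # function-call style: arguments arrive as a JSON-encoded string
        args = json.loads(args)
    return entry["fn"](**args)
```

The model only ever chooses a name and arguments; executing the function and feeding the result back stays in application code, which is also where argument validation belongs.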

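With a prompt like the following, AutoGPT can complete some complex tasks, such as reviewing the code of an open-source project or writing comments for it. Recently I came across Aone Copilot, which focuses on two scenarios: code completion and code Q&A. If we could call Aone Copilot's API, could an agent, after we push an MR (merge request), also do code-review work such as style and syntax checks for us, and write unit test cases?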

```text
You are {{ai-name}}, {{user-provided AI bot description}}.
Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications.

GOALS:

1. {{user-provided goal 1}}
2. {{user-provided goal 2}}
3. ...
4. ...
5. ...

Constraints:
1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files.
2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember.
3. No user assistance
4. Exclusively use the commands listed in double quotes e.g. "command name"
5. Use subprocesses for commands that will not terminate within a few minutes

Commands:
1. Google Search: "google", args: "input": "<search>"
2. Browse Website: "browse_website", args: "url": "<url>", "question": "<what_you_want_to_find_on_website>"
3. Start GPT Agent: "start_agent", args: "name": "<name>", "task": "<short_task_desc>", "prompt": "<prompt>"
4. Message GPT Agent: "message_agent", args: "key": "<key>", "message": "<message>"
5. List GPT Agents: "list_agents", args:
6. Delete GPT Agent: "delete_agent", args: "key": "<key>"
7. Clone Repository: "clone_repository", args: "repository_url": "<url>", "clone_path": "<directory>"
8. Write to file: "write_to_file", args: "file": "<file>", "text": "<text>"
9. Read file: "read_file", args: "file": "<file>"
10. Append to file: "append_to_file", args: "file": "<file>", "text": "<text>"
11. Delete file: "delete_file", args: "file": "<file>"
12. Search Files: "search_files", args: "directory": "<directory>"
13. Analyze Code: "analyze_code", args: "code": "<full_code_string>"
14. Get Improved Code: "improve_code", args: "suggestions": "<list_of_suggestions>", "code": "<full_code_string>"
15. Write Tests: "write_tests", args: "code": "<full_code_string>", "focus": "<list_of_focus_areas>"
16. Execute Python File: "execute_python_file", args: "file": "<file>"
17. Generate Image: "generate_image", args: "prompt": "<prompt>"
18. Send Tweet: "send_tweet", args: "text": "<text>"
19. Do Nothing: "do_nothing", args:
20. Task Complete (Shutdown): "task_complete", args: "reason": "<reason>"

Resources:
1. Internet access for searches and information gathering.
2. Long Term memory management.
3. GPT-3.5 powered Agents for delegation of simple tasks.
4. File output.

Performance Evaluation:
1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities.
2. Constructively self-criticize your big-picture behavior constantly.
3. Reflect on past decisions and strategies to refine your approach.
4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

You should only respond in JSON format as described below
Response Format:
{
    "thoughts": {
        "text": "thought",
        "reasoning": "reasoning",
        "plan": "- short bulleted\n- list that conveys\n- long-term plan",
        "criticism": "constructive self-criticism",
        "speak": "thoughts summary to say to user"
    },
    "command": {
        "name": "command name",
        "args": {
            "arg name": "value"
        }
    }
}
Ensure the response can be parsed by Python json.loads
```

LangChain already has many built-in framework modules for implementing agents, mainly including:

toolkits: through a registration mechanism, return to the agent a set of tools it can call. The base class is BaseToolkit.

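```python
# Excerpt from LangChain's source (BaseModel and BaseTool come from LangChain)
class BaseToolkit(BaseModel, ABC):
    """Base Toolkit representing a collection of related tools."""

    @abstractmethod
    def get_tools(self) -> List[BaseTool]:
        """Get the tools in the toolkit."""
```

We can implement different toolkits by inheriting from BaseToolkit, and each toolkit implements a set of tools. A Tool consists of several parts, of which name and description are required. These fields tell the LLM what the tool does and how to call it, so a registered tool's name should state its purpose clearly, and the description can include few-shot calling examples to help the LLM understand the tool. LangChain also ships many built-in tools that we can use directly to assemble our tool list.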

```python
# Abridged excerpt from LangChain's BaseTool and Tool
class BaseTool(BaseModel, Runnable[Union[str, Dict], Any]):
    name: str
    """The unique name of the tool that clearly communicates its purpose."""

    description: str
    """Used to tell the model how/when/why to use the tool.

    You can provide few-shot examples as a part of the description.
    """

    ...


class Tool(BaseTool):
    """Tool that takes in function or coroutine directly."""

    description: str = ""

    func: Optional[Callable[..., str]]
    """The function to run when the tool is called."""
```

Next, let's build a simple agent demo. This agent does two main things:

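1. Search the web to collect the data the question needs.

2. Use the collected data to perform calculations and answer the user's question.

In this flow we mainly use two tools: Search and Calculator.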
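In this demo the Calculator tool is LLMMathChain, which asks the model to rewrite the question as an expression and then evaluates it with numexpr. If you would rather not pass model-written text to a general-purpose evaluator, a restricted evaluator is one option. safe_eval is a hypothetical, stdlib-only sketch that accepts only numeric literals and + - * / ** (it also maps ^ to **, an assumption about how models tend to write powers).

```python
import ast
import operator

# Only these AST operator types are allowed
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
    ast.UAdd: operator.pos,
}


def safe_eval(expression: str) -> float:
    """Evaluate arithmetic on numeric literals; reject names, calls, attributes."""
    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    # In Python ^ is XOR, so treat it as exponentiation here
    return walk(ast.parse(expression.replace("^", "**"), mode="eval"))
```

Such a function could be wrapped in a Tool in place of llm_math_chain.run; the trade-off is that it only handles plain arithmetic, whereas numexpr also supports functions such as sin and sqrt.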

```python
# Requires OPENAI_API_KEY and SERPAPI_API_KEY in the environment
from langchain.agents import AgentType, Tool, initialize_agent
from langchain.chains import LLMMathChain
from langchain.chat_models import ChatOpenAI
from langchain.utilities import SerpAPIWrapper

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")
search = SerpAPIWrapper()
# LLMMathChain turns a math question into an expression and evaluates it with numexpr
llm_math_chain = LLMMathChain.from_llm(llm=llm, verbose=True)

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events. You should ask targeted questions",
    ),
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="useful for when you need to answer questions about math",
    ),
]

# OPENAI_FUNCTIONS lets the model choose tools through OpenAI function calling
agent = initialize_agent(tools, llm, agent=AgentType.OPENAI_FUNCTIONS, verbose=True)

agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")
```

````text
> Entering new chain...

Invoking: Search with {'query': 'Leo DiCaprio girlfriend'}

Amidst his casual romance with Gigi, Leo allegedly entered a relationship with 19-year old model, Eden Polani, in February 2023.

Invoking: Calculator with {'expression': '19^0.43'}

> Entering new chain...
19^0.43```text
19**0.43
```
...numexpr.evaluate("19**0.43")...

Answer: 3.547023357958959
> Finished chain.
Answer: 3.547023357958959
Leo DiCaprio's girlfriend is reportedly Eden Polani. Her current age raised to the power of 0.43 is approximately 3.55.

> Finished chain.
"Leo DiCaprio's girlfriend is reportedly Eden Polani. Her current age raised to the power of 0.43 is approximately 3.55."
````

As you can see, the agent successfully searches for the knowledge it needs and performs the calculation to get the result.

This case combines an LLM with a database, answering user questions by querying the data in tables. The key prompt is:

```python
_postgres_prompt = """You are a PostgreSQL expert. Given an input question, first create a syntactically correct PostgreSQL query to run, then look at the results of the query and return the answer to the input question.
Unless the user specifies in the question a specific number of examples to obtain, query for at most {top_k} results using the LIMIT clause as per PostgreSQL. You can order the results to return the most informative data in the database.
Never query for all columns from a table. You must query only the columns that are needed to answer the question. Wrap each column name in double quotes (") to denote them as delimited identifiers.
Pay attention to use only the column names you can see in the tables below. Be careful to not query for columns that do not exist. Also, pay attention to which column is in which table.
Pay attention to use CURRENT_DATE function to get the current date, if the question involves "today".

Use the following format:

Question: Question here
SQLQuery: SQL Query to run
SQLResult: Result of the SQLQuery
Answer: Final answer here
"""
```

Below is the working code. At the time of writing, OpenAI's model had the strongest reasoning ability in this scenario and was able to complete this complex agent task correctly.

```python
# Export your OpenAI key first: export OPENAI_API_KEY=sk-xxxxx
from langchain.agents import create_sql_agent
from langchain.agents.agent_toolkits import SQLDatabaseToolkit
from langchain.chat_models import ChatOpenAI
from langchain.sql_database import SQLDatabase

# The URI uses SQLAlchemy's psycopg2cffi dialect (pip install psycopg2cffi);
# with the regular psycopg2 driver, use "postgresql+psycopg2://..." instead
db = SQLDatabase.from_uri("postgresql+psycopg2cffi://admin:password123@localhost/admin")
llm = ChatOpenAI(model_name="gpt-3.5-turbo")
# The toolkit gives the agent tools to list tables, read schemas, and run queries
toolkit = SQLDatabaseToolkit(db=db, llm=llm)

agent_executor = create_sql_agent(
    llm=llm,
    toolkit=toolkit,
    verbose=True,
)

agent_executor.run("using the teachers table, find the first_name and last name of teachers who earn less the mean salary?")
```

As you can see, after several rounds of reasoning the model answered our question correctly.

```text
> Entering new AgentExecutor chain...
Action: sql_db_list_tables
Action Input: ""
Observation: teachers
Thought:I can query the "teachers" table to find the first_name and last_name columns.
Action: sql_db_schema
Action Input: "teachers"
Observation:
CREATE TABLE teachers (
    id INTEGER,
    first_name VARCHAR(25),
    last_name VARCHAR(50),
    school VARCHAR(50),
    hire_data DATE,
    salary NUMERIC
)

/*
3 rows from teachers table:
id first_name last_name school hire_data salary
None Janet Smith F.D. Roosevelt HS 2011-10-30 36200
None Lee Reynolds F.D. Roosevelt HS 1993-05-22 65000
None Samuel Cole Myers Middle School 2005-08-01 43500
*/
Thought:I can now construct a query to find the first_name and last_name of teachers who earn less than the mean salary.
Action: sql_db_query
Action Input: "SELECT first_name, last_name FROM teachers WHERE salary < (SELECT AVG(salary) FROM teachers) LIMIT 10"
Observation: [('Janet', 'Smith'), ('Samuel', 'Cole'), ('Samantha', 'Bush'), ('Betty', 'Diaz'), ('Kathleen', 'Roush')]
Thought:Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 / min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 / min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 / min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 8.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 / min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
The first_name and last_name of teachers who earn less than the mean salary are Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, and Kathleen Roush.
Final Answer: Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, Kathleen Roush

> Finished chain.
'Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, Kathleen Roush'
```

Unlike a ChatBot, an agent places higher demands on the LLM's reasoning ability. A ChatBot's answer may be wrong, but people can still judge whether it is useful and give feedback, and a useless answer can simply be ignored or regenerated. An agent, however, tolerates far fewer wrong judgments by the model. Although self-reflection can reduce an agent's error rate, the scenarios where agents can currently be applied remain limited. We need to keep exploring new scenarios while continually improving LLM reasoning, so that more complex agents can be built.

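At the same time, agents can currently handle work only in fairly narrow scenarios: for example, when our intent is clear, the agent is given only a small toolkit (fewer than 10 tools), and each tool's purpose is clearly distinct, the LLM can choose the right tool and get the expected result. But when hundreds or more tools are registered in one agent, the LLM may fail to choose the right tool. One solution is a multi-level tree of agents: a parent agent routes and dispatches tasks to different child agents, and each child agent holds and uses only a limited toolkit, which improves the task completion rate in complex scenarios.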
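The multi-level agent tree can be sketched as a parent that routes each task to one child, with each child holding only a few tools. RouterAgent and SubAgent are hypothetical; keyword overlap stands in for the LLM call that would normally judge relevance and pick a tool.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


class SubAgent:
    """Leaf agent that only knows a handful of tools."""

    def __init__(self, name: str, keywords: List[str], tools: Dict[str, Tool]) -> None:
        self.name = name
        self.keywords = keywords
        self.tools = tools

    def relevance(self, task: str) -> int:
        # stand-in for an LLM relevance judgment: count matching keywords
        text = task.lower()
        return sum(word in text for word in self.keywords)

    def run(self, task: str) -> str:
        # stand-in for "let the LLM pick one of my few tools":
        # call the first tool whose name appears in the task
        for tool_name, tool in self.tools.items():
            if tool_name in task.lower():
                return tool(task)
        return f"{self.name}: no suitable tool"


class RouterAgent:
    """Parent agent: sends each task to the most relevant child."""

    def __init__(self, children: List[SubAgent]) -> None:
        self.children = children

    def run(self, task: str) -> str:
        best = max(self.children, key=lambda child: child.relevance(task))
        return best.run(task)
```

For example, a router over a database sub-agent (keywords sql, table) and a web sub-agent (keywords search, news) sends "search the news" to the web agent. Each level only ever chooses among a few options, which is the point of the tree.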

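AnalyticDB, a cloud-native data warehouse, is a massively parallel processing (MPP) data warehouse service that provides online analysis of massive amounts of data. On top of its cloud-native data warehouse capabilities, it includes a fully self-developed, enterprise-grade vector engine that supports streaming vector writes and retrieval over tens of billions of vectors. It supports multi-path recall combining structured data analysis, vector retrieval, and full-text retrieval, and it integrates with mainstream domestic and international LLMs such as Tongyi Qianwen.

To learn more about AnalyticDB and related solutions, see:

AnalyticDB vector engine introduction: https://www.aliyun.com/activity/database/adbpg_vector

One-click deployment of PAI + Tongyi Qianwen + the AnalyticDB vector engine to build a ChatBot: https://computenest.console.aliyun.com/user/cn-hangzhou/serviceInstanceCreate?ServiceId=service-ddfecdd9b626465f85b6

Reposted from the WeChat official account @阿里云開發者 (Alibaba Cloud Developer).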